Design Team: Vivian Nguyen & myself

Role: UX Design Intern

Software: Figma

Duration: Jul 2025-Aug 2025

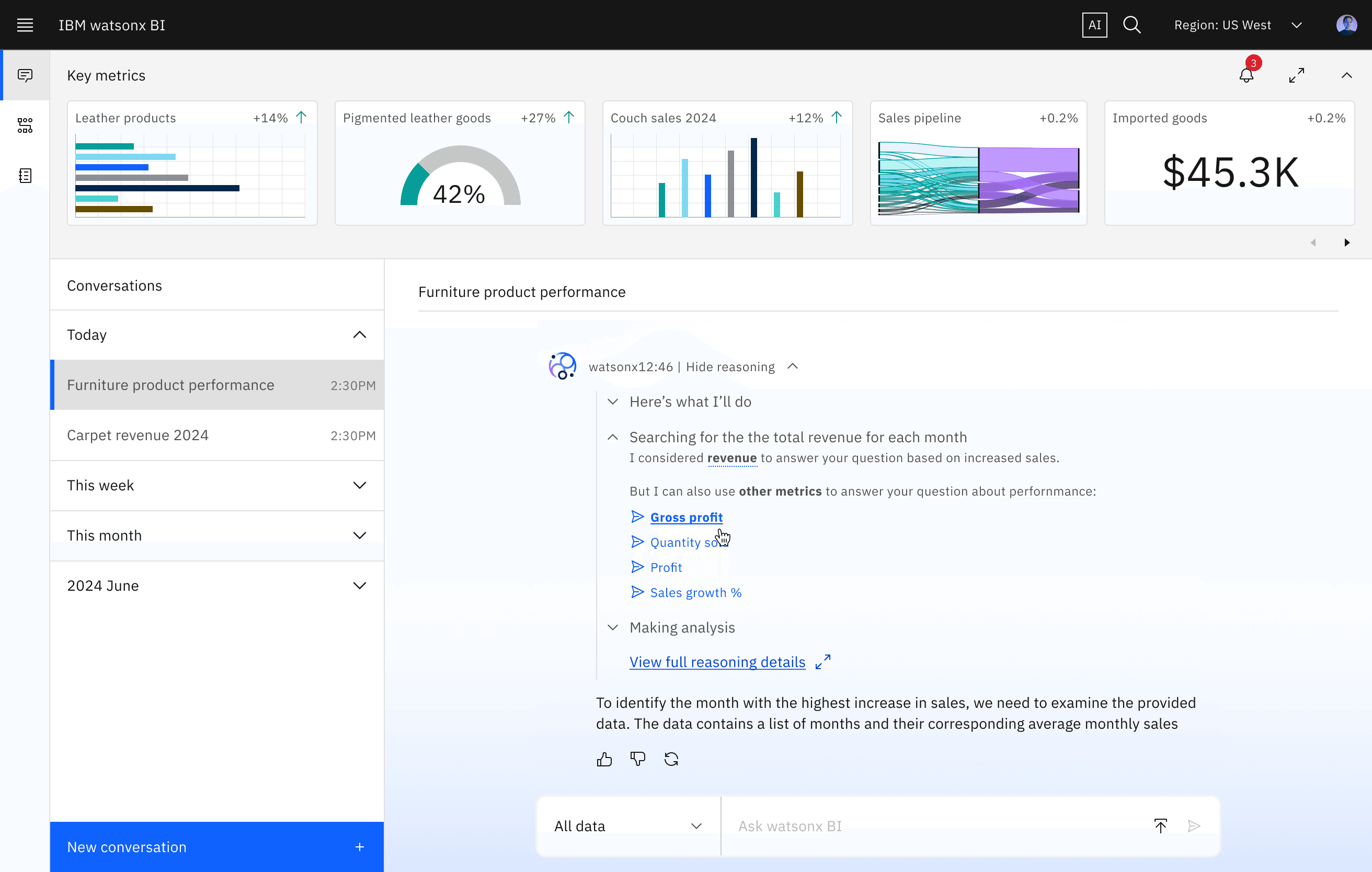

Watsonx Business Intelligence (BI) is IBM's newest AI-powered data analyst product.

The problem

The IBM Watsonx BI team was preparing to launch its newest AI data analyst, designed to help users quickly generate insights from complex datasets. However, user testing revealed a major barrier to adoption: 5/5 users did not fully trust the AI’s responses. Even when the outputs were accurate, participants were unsure how the system reached its conclusions, which raised concerns about transparency and reliability.

The design challenge:

🗣️ How might we enable Analytics Consumers to have confidence in the answers they see?

My design process

competitive analysis — design exploration — user testing — handoff

Competitive analysis

To understand how other AI tools establish user trust, I conducted a quick competitive analysis of leading AI chat platforms such as ChatGPT, Perplexity, and Claude.

One key pattern stood out: each provided some level of chain-of-thought reasoning, whether through visible sources, step-by-step explanations, or summaries of how conclusions were reached.

In contrast, Watsonx BI presented answers without showing its reasoning. Initial user testing suggested this made users hesitant to trust the results. After I discussed my findings with my team, this insight became central to our design direction: making the AI’s thought process more transparent and interpretable.

Some (very quick) design exploration

With the product launch date approaching, we didn’t have time for extended exploration. Building on the competitive analysis, I jumped straight into hi-fi designs for the Chain-of-Thought reasoning feature. Working with IBM’s Carbon Design System let me iterate quickly while keeping the designs consistent and ready for development.

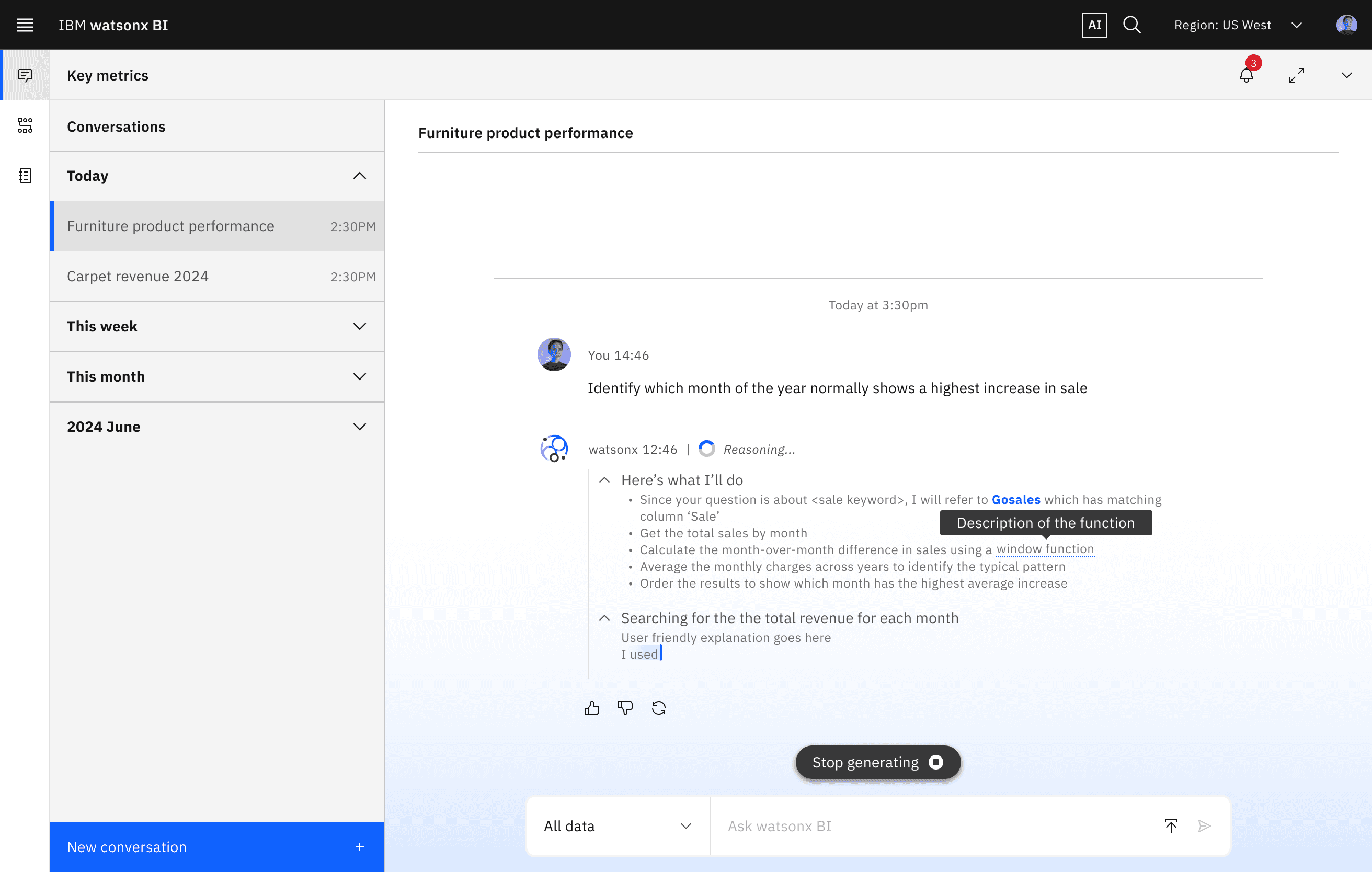

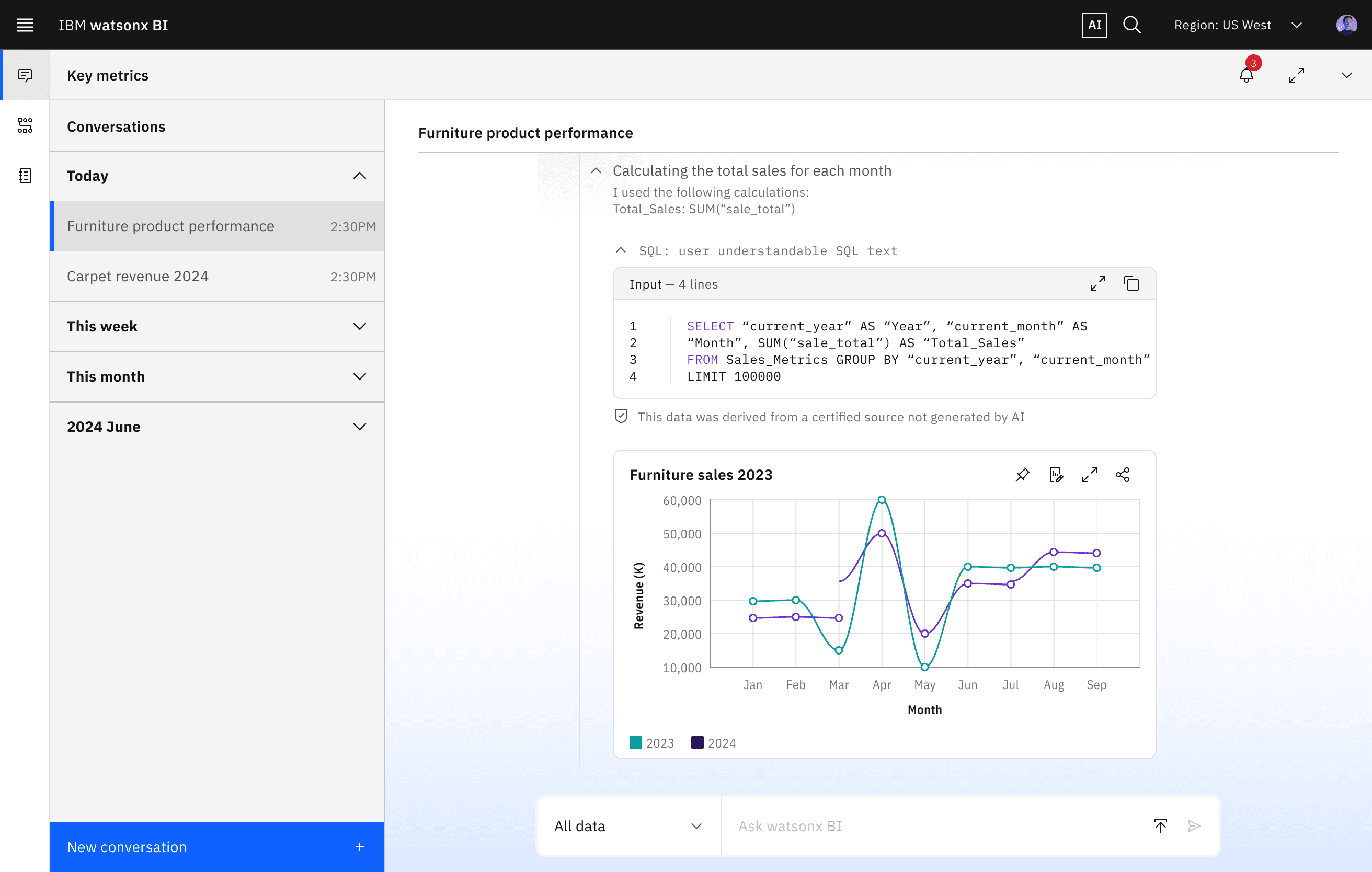

A key design decision I made was to display both the AI’s Chain-of-Thought reasoning and the underlying technical data, while hiding the SQL queries under an accordion. This progressive disclosure approach kept non-technical users from being overwhelmed. It also gave advanced users access to the detailed data behind the AI’s conclusions, further building trust.

REASONING IN PROGRESS

REASONING EXPANDED

Time to validate!

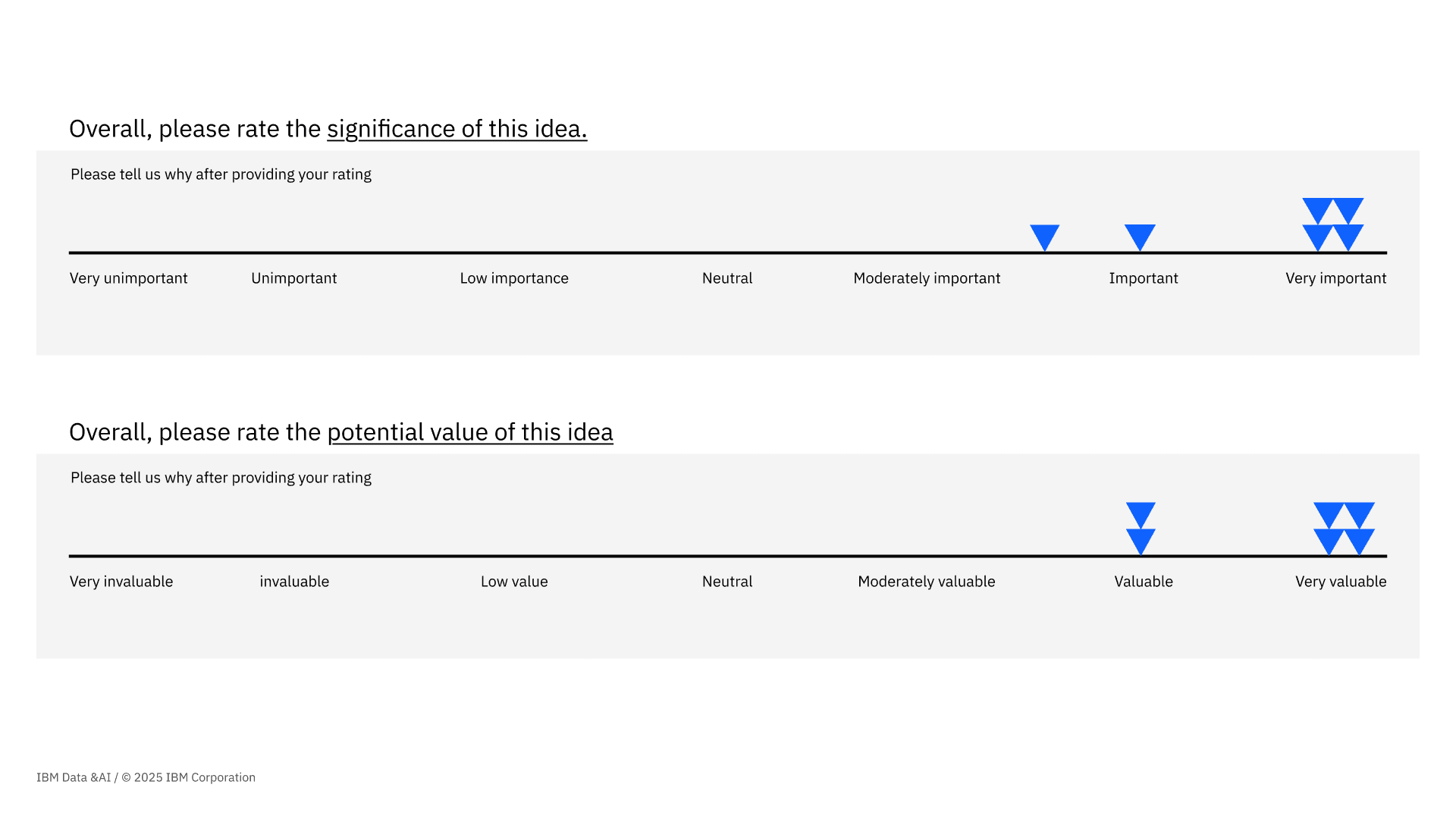

With the support of my mentor, Vivian, I conducted user testing sessions to validate the redesigned AI chat. I presented six participants with each flow and asked them to share their thoughts. In addition to qualitative feedback, we asked users to rate the perceived significance and value of each flow. This helped us prioritize which areas of the new design were most impactful and where improvements were needed.

The results? User testing showed that the redesigned Chain-of-Thought reasoning feature led to an increase of more than 90% in user confidence and trust in the AI’s outputs, confirming that reasoning transparency effectively addressed users’ concerns.

Additional ideas that were validated by research but were not feasible within the given timeline:

INLINE DISAMBIGUATION

ASSET DISAMBIGUATION

Research synthesis + handoff

I synthesized the user research and testing insights into clear recommendations, presenting them in a meeting with stakeholders to align on the next steps. After incorporating feedback, I finalized the hi-fi designs and handed them off to developers with detailed guidance to ensure accurate implementation.